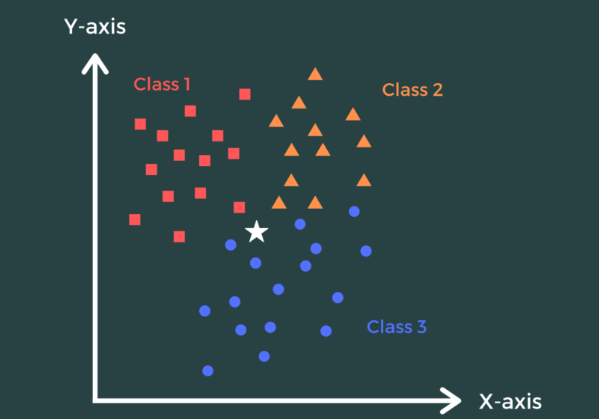

The K Nearest Neighbor (KNN) algorithm is a simple and intuitive supervised machine learning algorithm used for both classification and regression. It works on the assumption that similar data points, meaning points that lie close together in feature space, tend to belong to the same class (or, for regression, tend to have similar output values).

Here’s how the KNN algorithm works:

- Training Phase: During the training phase, the algorithm simply stores the feature vectors and corresponding class labels (for classification) or output values (for regression) of the training dataset.

- Prediction Phase: When a new data point needs to be classified, or its output value predicted, the KNN algorithm follows these steps:

  - Calculate the distance between the new data point and every training data point. Euclidean distance is the most common metric, but other measures, such as Manhattan distance, can also be used.

  - Select the K training points nearest to the new data point based on these distances. K is a user-defined parameter.

  - For classification, assign the class label that wins the majority vote among the K nearest neighbors.

  - For regression, compute the average (or a weighted average) of the K nearest neighbors' output values. This average becomes the predicted output value for the new data point.
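The two phases above can be sketched in plain Python. This is a minimal, unoptimized illustration, assuming small in-memory lists of numeric feature tuples and Euclidean distance via the standard library's `math.dist` (Python 3.8+). "Training" is nothing more than keeping the lists around. The function names `k_nearest`, `knn_classify`, and `knn_regress` and the toy data are hypothetical, chosen only for this example.

```python
import math
from collections import Counter


def k_nearest(train_X, x, k):
    """Indices of the k training points closest to x by Euclidean distance."""
    # Pair each training point's distance with its index, then sort ascending.
    distances = sorted((math.dist(p, x), i) for i, p in enumerate(train_X))
    return [i for _, i in distances[:k]]


def knn_classify(train_X, train_y, x, k):
    """Majority vote over the labels of the k nearest neighbors."""
    votes = Counter(train_y[i] for i in k_nearest(train_X, x, k))
    # most_common(1) returns the label with the highest count; Counter orders
    # equal counts by first appearance, so a tie goes to the label of the
    # closest neighbor among the tied labels.
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, x, k):
    """Unweighted mean of the k nearest neighbors' output values."""
    neighbors = k_nearest(train_X, x, k)
    return sum(train_y[i] for i in neighbors) / len(neighbors)


# Made-up toy data: two clusters in 2-D.
X = [(1.0, 1.0), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5), (1.2, 0.8)]
y_class = ["A", "A", "B", "B", "A"]
y_value = [1.0, 1.5, 4.0, 4.5, 1.0]

print(knn_classify(X, y_class, (1.1, 1.0), k=3))  # A
print(knn_regress(X, y_value, (5.5, 5.0), k=2))   # 4.25
```

Sorting every distance keeps the sketch short; it matches the brute-force description in the text rather than any optimized search.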

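The choice of K is crucial in the KNN algorithm. A small K makes the decision boundary more flexible but also more sensitive to noise: with K = 1, a single mislabeled neighbor decides the prediction. A larger K smooths the decision boundary but may wash out local detail. In the extreme, with plain majority voting and K equal to the size of the training set, every query receives the overall majority class.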
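To see how K trades noise sensitivity against detail, the sketch below runs the same majority-vote classifier with several values of K on a made-up one-dimensional data set that contains one deliberately mislabeled point. The function name `knn_classify` and the data are illustrative assumptions.

```python
import math
from collections import Counter


def knn_classify(train_X, train_y, x, k):
    # Sort training indices by Euclidean distance to x, keep the k closest,
    # then return the majority label among them.
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


# Made-up 1-D data: class "A" sits around 0-4, class "B" around 10-13,
# and the point at 2.0 carries a noisy "B" label.
X = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,), (10.0,), (11.0,), (12.0,), (13.0,)]
y = ["A", "A", "B", "A", "A", "B", "B", "B", "B"]

query = (2.1,)
for k in (1, 3, 5, 9):
    print(k, knn_classify(X, y, query, k))
# 1 B   <- K = 1 copies the single noisy neighbor
# 3 A
# 5 A
# 9 B   <- K = whole training set: always the overall majority class
```

Intermediate values of K outvote the noisy point while still reflecting the local neighborhood.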

Some important considerations for using the KNN algorithm include:

- Feature scaling: Since KNN relies on distance measures, it is important to scale or normalize the features (for example, with min-max scaling or standardization) so that no single feature with a large numeric range dominates the distance calculation.

- Handling categorical features: Distance calculations require numerical inputs, so categorical features must be encoded or transformed into numerical values, for example with one-hot encoding.

- Handling imbalanced data: In classification tasks, an imbalanced class distribution can bias the majority vote toward the more frequent classes. To address this, one can use techniques such as weighted voting or resampling.
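The first two considerations can be illustrated with a small sketch. It uses min-max scaling, fitted on the training data and then applied to the query, as one possible scaling choice (standardization is another), and one-hot encoding as one common way to turn a category into numbers. The helper names (`min_max_fit`, `min_max_apply`, `nearest_index`, `one_hot`) and the income/age data are hypothetical.

```python
import math


def min_max_fit(train_X):
    """Per-feature (min, max) bounds, computed from the training data only."""
    return [(min(col), max(col)) for col in zip(*train_X)]


def min_max_apply(x, bounds):
    """Rescale each feature with the training bounds (training values land in [0, 1])."""
    return tuple(
        (v - lo) / (hi - lo) if hi > lo else 0.0  # constant feature -> 0.0
        for v, (lo, hi) in zip(x, bounds)
    )


def nearest_index(train_X, x):
    """Index of the single closest training point (1-NN) by Euclidean distance."""
    return min(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))


def one_hot(value, categories):
    """Encode a categorical value as a 0/1 vector over a fixed list of categories."""
    return tuple(1.0 if value == c else 0.0 for c in categories)


# Made-up data: (annual income in dollars, age in years).
X = [(50000.0, 25.0), (52000.0, 60.0), (90000.0, 58.0)]
query = (50500.0, 60.0)

# Unscaled: income differences (hundreds to thousands) swamp the age column.
print(nearest_index(X, query))  # 0, the 25-year-old

bounds = min_max_fit(X)
X_scaled = [min_max_apply(p, bounds) for p in X]
q_scaled = min_max_apply(query, bounds)
print(nearest_index(X_scaled, q_scaled))  # 1, similar income and the same age

print(one_hot("green", ["red", "green", "blue"]))  # (0.0, 1.0, 0.0)
```

Fitting the bounds on the training data alone, and reusing them for every query, keeps training and prediction on the same scale.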

Overall, the KNN algorithm is easy to understand, interpretable, and applicable to many domains. However, it can be computationally expensive for large datasets, because every prediction requires computing the distance from the new data point to every training data point.
